Archive

SQL Server Support Policy for Virtualization and Failover Clustering updated

More great news from Microsoft: the SQL Server support policy has been updated. SQL Server was already supported in a virtual machine, and building a SQL Server failover cluster on VMs is now supported as well. See the CSS SQL Server Engineers blog for details.

Support policy for Microsoft SQL Server products that are running in a hardware virtualization environment

One fairly controversial aspect to this policy was our support (actually non-support is a better word) for “guest” failover clustering. We didn’t support installing SQL Server failover clustering in a virtual machine. Well this policy is now changed effective immediately as updated in the article.

The article now contains the following new wording on this topic:

Guest Failover Clustering is supported for SQL Server 2005 and SQL Server 2008 in a virtual machine for Windows Server 2008 with Hyper-V, Microsoft Hyper-V Server 2008, and SVVP certified configurations provided both of the following requirements are met:

The Operating System running in the virtual machine (the “Guest Operating System”) is Windows Server 2008 or higher

The virtualization environment meets the requirements of Windows 2008 Failover Clustering as documented at The Microsoft Support Policy for Windows Server 2008 Failover Clusters.

Guest Failover Clustering means creating a SQL Server failover cluster in which each node runs as a virtual machine.

The article on Windows 2008 Failover Clustering has 2 requirements:

- All hardware and software components must meet “Certified for Windows Server 2008” logo requirements.

- The configuration must pass the Validate test in the Failover Clusters Management snap-in. This is run inside the virtual machine.

Virtualization and Protection Rings (Welcome to Ring -1) Part II

Hyper-V and Ring -1

Under Hyper-V, a program known as a hypervisor runs directly on the hardware of the host system in ring 0. The hypervisor handles tasks such as CPU and memory resource allocation for the virtual machines, and provides interfaces for higher level administration and monitoring tools.

Clearly, if the hypervisor is going to occupy ring 0 of the CPU, the kernels for any guest operating systems running on the system must run in less privileged CPU rings. Unfortunately, most operating system kernels are written explicitly to run in ring 0 for the simple reason that they need to perform tasks that are only available in that ring, such as the ability to execute privileged CPU instructions and directly manipulate memory. One solution to this problem is to modify the guest operating systems, replacing any privileged operations that will only run in ring 0 of the CPU with calls to the hypervisor (known as hypercalls). The hypervisor in turn performs the task on behalf of the guest system.

Another solution is to leverage the hardware assisted virtualization features of the latest generation of processors from both Intel and AMD. These technologies, known as Intel VT and AMD-V respectively, provide extensions necessary to run unmodified guest virtual machines. In very simplistic terms these new processors provide an additional privilege mode (referred to as ring -1) above ring 0 in which the hypervisor can operate, essentially leaving ring 0 available for unmodified guest operating systems.

The root partition contains the Virtualization Stack, a collection of components that provides a large amount of the Hyper-V functionality. The following diagram provides an abstract outline of the stack:

The following table provides an overview of each of the virtualization stack components:

| Component | Description |
| --- | --- |
| Virtual Machine Management Service (VMM Service) | Manages the state of virtual machines running in the child partitions (active, offline, stopped, etc.) and controls the tasks that can be performed on a virtual machine based on its current state (such as taking snapshots). Also manages the addition and removal of devices. When a virtual machine is started, the VMM Service is also responsible for creating a corresponding Virtual Machine Worker Process. |
| Virtual Machine Worker Process | Virtual Machine Worker Processes are started by the VMM Service when virtual machines are started. A Virtual Machine Worker Process (named vmwp.exe) is created for each Hyper-V virtual machine and is responsible for much of the management level interaction between the parent partition Windows Server 2008 system and the virtual machines in the child partitions. The duties of the Virtual Machine Worker Process include creating, configuring, running, pausing, resuming, saving, restoring and snapshotting the associated virtual machine. It also handles IRQs, memory and I/O port mapping through a Virtual Motherboard (VMB). |
| Virtual Devices | Virtual Devices are managed by the Virtual Motherboard (VMB). Virtual Motherboards are contained within the Virtual Machine Worker Processes, of which there is one for each virtual machine. Virtual Devices fall into two categories, Core VDevs and Plug-in VDevs. Core VDevs can either be Emulated Devices or Synthetic Devices. |
| Virtual Infrastructure Driver | Operates in kernel mode (i.e. in the privileged CPU ring) and provides partition, memory and processor management for the virtual machines running in the child partitions. The Virtual Infrastructure Driver (Vid.sys) also provides the conduit for the components higher up the Virtualization Stack to communicate with the hypervisor. |
| Windows Hypervisor Interface Library | A library (named WinHv.sys) located in the parent partition Windows Server 2008 instance and in any guest operating systems which are Hyper-V aware (in other words, modified specifically to operate in a Hyper-V child partition). Allows the operating system’s drivers to access the hypervisor using standard Windows API calls instead of hypercalls. |

In addition to the components contained within the virtualization stack, the root partition also contains the following components:

| Component | Description |
| --- | --- |
| VMBus | Part of Hyper-V Integration Services, the VMBus facilitates highly optimized communication between child partitions and the parent partition. |
| Virtualization Service Providers | Reside in the parent partition and provide synthetic device support via the VMBus to Virtualization Service Clients (VSCs) running in child partitions. |
| Virtualization Service Clients | Virtualization Service Clients are synthetic device instances that reside in child partitions. They communicate with the Virtualization Service Providers (VSPs) in the parent partition over the VMBus to fulfill the child partition’s device access requests. |

Source

http://www.virtuatopia.com/index.php/An_Overview_of_the_Hyper-V_Architecture

Virtualization and Protection Rings (Welcome to Ring -1) Part I

What are protection rings?

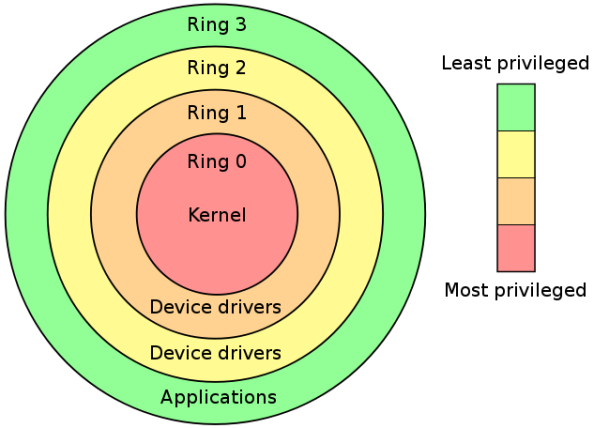

Protection rings are a mechanism to protect data and functionality from faults (fault tolerance) and malicious behavior (computer security). This approach is diametrically opposite to that of capability-based security.

Computer operating systems provide different levels of access to resources.

A protection ring is one of two or more hierarchical levels or layers of privilege within the architecture of a computer system. This is generally hardware-enforced by some CPU architectures that provide different CPU modes at the firmware level. Rings are arranged in a hierarchy from most privileged (most trusted, usually numbered zero) to least privileged (least trusted, usually with the highest ring number). On most operating systems, Ring 0 is the level with the most privileges and interacts most directly with the physical hardware such as the CPU and memory.

Special gates between rings are provided to allow an outer ring to access an inner ring’s resources in a predefined manner, as opposed to allowing arbitrary usage. Correctly gating access between rings can improve security by preventing programs from one ring or privilege level from misusing resources intended for programs in another. For example, spyware running as a user program in Ring 3 should be prevented from turning on a web camera without informing the user, since hardware access should be a Ring 1 function reserved for device drivers. Programs such as web browsers running in higher numbered rings must request access to the network, a resource restricted to a lower numbered ring.

x86 CPU hardware actually provides four protection rings: 0, 1, 2, and 3. Only rings 0 (Kernel) and 3 (User) are typically used.
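As a toy illustration of the gating idea described above (not how any real CPU or operating system implements it), the sketch below models rings as integers and allows direct access only when the caller's ring number is less than or equal to the ring a resource requires. The names `Resource`, `can_access` and `call_gate` are hypothetical and exist only for this example.

```python
from dataclasses import dataclass

KERNEL_RING = 0  # most privileged
USER_RING = 3    # least privileged on x86


@dataclass(frozen=True)
class Resource:
    name: str
    required_ring: int  # highest ring number still allowed direct access


def can_access(current_ring: int, resource: Resource) -> bool:
    """Lower ring numbers are more privileged, so compare with <=."""
    return current_ring <= resource.required_ring


def call_gate(current_ring: int, resource: Resource) -> str:
    """Hypothetical gate: outer rings get a mediated request, not direct access."""
    if can_access(current_ring, resource):
        return f"direct access to {resource.name}"
    return f"request for {resource.name} forwarded to ring {resource.required_ring}"


webcam = Resource("webcam", required_ring=KERNEL_RING)
print(call_gate(KERNEL_RING, webcam))
print(call_gate(USER_RING, webcam))
```

The point of the gate is that code in ring 3 never touches the device itself; it can only ask a more privileged ring to act for it.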

In any modern operating system, the CPU is actually spending time in two very distinct modes:

1. Kernel Mode

In Kernel mode, the executing code has complete and unrestricted access to the underlying hardware. It can execute any CPU instruction and reference any memory address. Kernel mode is generally reserved for the lowest-level, most trusted functions of the operating system. Crashes in kernel mode are catastrophic; they will halt the entire PC.

2. User Mode

In User mode, the executing code has no ability to directly access hardware or reference memory. Code running in user mode must delegate to system APIs to access hardware or memory. Due to the protection afforded by this sort of isolation, crashes in user mode are always recoverable. Most of the code running on your computer will execute in user mode.

Hypervisor mode

The x86 family of CPUs provides a range of protection levels, also known as rings, in which code can execute. Ring 0 has the highest level of privilege, and it is in this ring that the operating system kernel normally runs. Code executing in ring 0 is said to be running in system space, kernel mode or supervisor mode. All other code, such as applications running on the operating system, operates in less privileged rings, typically ring 3.

Under hypervisor virtualization a program known as a hypervisor (also known as a type 1 Virtual Machine Monitor or VMM) runs directly on the hardware of the host system in ring 0. The task of this hypervisor is to handle resource and memory allocation for the virtual machines in addition to providing interfaces for higher level administration and monitoring tools.

Clearly, with the hypervisor occupying ring 0 of the CPU, the kernels for any guest operating systems running on the system must run in less privileged CPU rings. Unfortunately, most operating system kernels are written explicitly to run in ring 0 for the simple reason that they need to perform tasks that are only available in that ring, such as the ability to execute privileged CPU instructions and directly manipulate memory.

A number of different solutions to this problem have been devised in recent years, each of which is described below:

Paravirtualization

Under Paravirtualization the kernel of the guest operating system is modified specifically to run on the hypervisor. This typically involves replacing any privileged operations that will only run in ring 0 of the CPU with calls to the hypervisor (known as hypercalls). The hypervisor in turn performs the task on behalf of the guest kernel.

This typically limits support to open source operating systems such as Linux which may be freely altered and proprietary operating systems where the owners have agreed to make the necessary code modifications to target a specific hypervisor. These issues notwithstanding, the ability of the guest kernel to communicate directly with the hypervisor results in greater performance levels than other virtualization approaches.
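The hypercall idea can be pictured with a small model. This is only a sketch under stated assumptions: the classes `Hypervisor` and `ParavirtualizedKernel`, the `hypercall` method and the `set_page_table` operation are all invented for illustration and do not correspond to the interface of any real hypervisor.

```python
class Hypervisor:
    """Toy hypervisor that owns the privileged state (hypothetical model)."""

    def __init__(self):
        # Maps guest name -> page table base address chosen by that guest.
        self.page_tables = {}

    def hypercall(self, guest, op, arg):
        # The hypervisor performs the privileged task on the guest's behalf.
        if op == "set_page_table":
            self.page_tables[guest] = arg
            return "ok"
        return "unsupported"


class ParavirtualizedKernel:
    """Guest kernel modified to call the hypervisor instead of the hardware."""

    def __init__(self, name, hypervisor):
        self.name = name
        self.hypervisor = hypervisor

    def set_page_table(self, base_address):
        # An unmodified kernel would execute a privileged instruction here;
        # the modified kernel issues a hypercall instead.
        return self.hypervisor.hypercall(self.name, "set_page_table", base_address)


hv = Hypervisor()
guest = ParavirtualizedKernel("guest1", hv)
print(guest.set_page_table(0x1000))
```

The guest kernel has to be changed to contain the `hypercall` call, which is why this approach depends on being able to modify the guest operating system.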

Full Virtualization

Full virtualization provides support for unmodified guest operating systems. The term unmodified refers to operating system kernels which have not been altered to run on a hypervisor and therefore still execute privileged operations as though running in ring 0 of the CPU.

In this scenario, the hypervisor provides CPU emulation to handle and modify privileged and protected CPU operations made by unmodified guest operating system kernels. Unfortunately this emulation process requires both time and system resources to operate resulting in inferior performance levels when compared to those provided by Paravirtualization.
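A rough way to picture this emulation is a loop that lets ordinary instructions through and handles privileged ones in software against a virtual CPU state. The sketch below is a deliberately simplified model: the instruction names, the `PRIVILEGED` set and the `run_guest` function are assumptions made for this example, not a description of how any particular hypervisor works.

```python
# Illustrative subset of operations treated as privileged in this toy model.
PRIVILEGED = {"cli", "sti", "load_cr3"}


def run_guest(instructions):
    """Run a toy instruction stream, emulating privileged instructions."""
    virtual_cpu = {"interrupts_enabled": True, "cr3": 0}
    emulated = 0
    for op, *args in instructions:
        if op in PRIVILEGED:
            # The hypervisor steps in and applies the effect to the
            # virtual CPU state rather than to the real hardware.
            emulated += 1
            if op == "cli":
                virtual_cpu["interrupts_enabled"] = False
            elif op == "sti":
                virtual_cpu["interrupts_enabled"] = True
            elif op == "load_cr3":
                virtual_cpu["cr3"] = args[0]
        # Unprivileged instructions would run unchanged; nothing to model here.
    return virtual_cpu, emulated


state, emulated = run_guest([("add",), ("cli",), ("load_cr3", 0x2000), ("sti",)])
print(state, emulated)
```

Every emulated instruction costs extra work in the hypervisor, which is the overhead the paragraph above refers to.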

Hardware Virtualization

Hardware virtualization leverages virtualization features built into the latest generations of CPUs from both Intel and AMD. These technologies, known as Intel VT and AMD-V respectively, provide extensions necessary to run unmodified guest virtual machines without the overheads inherent in full virtualization CPU emulation.

In very simplistic terms, these new processors provide an additional privilege mode above ring 0 in which the hypervisor can operate, essentially leaving ring 0 available for unmodified guest operating systems.

Sources:

http://en.wikipedia.org/wiki/Ring_(computer_security)

http://www.codinghorror.com/blog/archives/001029.html

System Center Operations Manager 2007 R2

Microsoft is pleased to announce the release of System Center Operations Manager 2007 R2.

The trial for Operations Manager 2007 R2 is now available. The release extends monitoring support from Windows platforms to UNIX and Linux servers and workloads, and adds detailed reporting on service levels, enhanced monitoring capabilities for systems and web applications, and more.

Tech Ed: Windows Server 2008 R2 Hyper-V News!

Some great news for all virtualization fans was announced today at Tech Ed in Los Angeles.

The most amazing part is Processor Compatibility:

With Hyper-V R2, we include a new Processor Compatibility feature. Processor compatibility allows you to move a virtual machine up and down multiple processor generations from the same vendor. Here’s how it works.

When a Virtual Machine (VM) is started on a host, the hypervisor exposes the set of supported processor features available on the underlying hardware to the VM. This set of processor features is called the guest visible processor features and is available to the VM until the VM is restarted.

When a VM is started with processor compatibility mode enabled, Hyper-V normalizes the processor feature set and only exposes guest visible processor features that are available on all Hyper-V enabled processors of the same processor architecture, i.e. AMD or Intel. This allows the VM to be migrated to any hardware platform of the same processor architecture. Processor features are “hidden” by the hypervisor by intercepting a VM’s CPUID instruction and clearing the returned bits corresponding to the hidden features.

Just so we’re clear: this still means AMD<->AMD and Intel<->Intel. It does not mean you can Live Migrate between different processor vendors AMD<->Intel or vice versa.
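The masking step can be sketched with plain bit operations. This is a simplified model, not Hyper-V's actual implementation: the feature bit values are made up, the functions `normalized_features`, `guest_cpuid` and `can_migrate` are hypothetical, and the normalized set is computed here as the intersection of a given list of hosts rather than a fixed per-vendor baseline.

```python
from functools import reduce

# Hypothetical feature bits; real CPUID leaves define many more.
SSE3 = 1 << 0
SSSE3 = 1 << 1
SSE4_1 = 1 << 2
SSE4_2 = 1 << 3


def normalized_features(host_masks):
    """Features present on every host: the bitwise AND of all masks."""
    return reduce(lambda a, b: a & b, host_masks)


def guest_cpuid(host_mask, visible_mask):
    """Model an intercepted CPUID: clear the bits hidden from the guest."""
    return host_mask & visible_mask


def can_migrate(source_vendor, target_vendor):
    """Compatibility mode only covers hosts from the same processor vendor."""
    return source_vendor == target_vendor


old_host = SSE3 | SSSE3
new_host = SSE3 | SSSE3 | SSE4_1 | SSE4_2
visible = normalized_features([old_host, new_host])

# The guest sees the same feature bits on either host.
print(guest_cpuid(old_host, visible) == guest_cpuid(new_host, visible))
```

Because the guest sees the same feature bits on both hosts, it never starts relying on an instruction set extension that would be missing after a migration.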